Gradient Descent (GD)

Gradient Descent (GD) is fundamental to training neural networks: it lets a network learn from data by updating its weights to minimise the error between the predicted output and the actual target [RHW86]. GD is an iterative optimisation algorithm that minimises the loss of a predictive model by repeatedly moving the parameters a small step in the direction of the negative gradient of the loss, computed over a training dataset. Backpropagation, an application of reverse-mode automatic differentiation, calculates the gradients of the loss with respect to the weights in a neural network. Together, the two train a neural network model: backpropagation supplies the gradients, and GD uses them to update the model’s parameters and reduce the error.
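To make the update rule concrete, here is a minimal sketch of batch gradient descent on a one-variable linear model. The model, the mean squared error loss, the toy data, and the names `gradient_descent`, `learning_rate`, and `epochs` are all assumptions chosen for illustration; they do not come from the cited sources.

```python
def gradient_descent(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by minimising mean squared error with batch GD.

    Illustrative sketch only: the hyperparameters are hypothetical defaults.
    """
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of L = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient, scaled by the learning rate.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy data generated from y = 2x + 1 (an assumption for this example).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = gradient_descent(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

On this toy data the fitted `w` and `b` should end up close to 2 and 1. With a learning rate that is too large the updates can overshoot and diverge, which is why the step size is kept small here.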

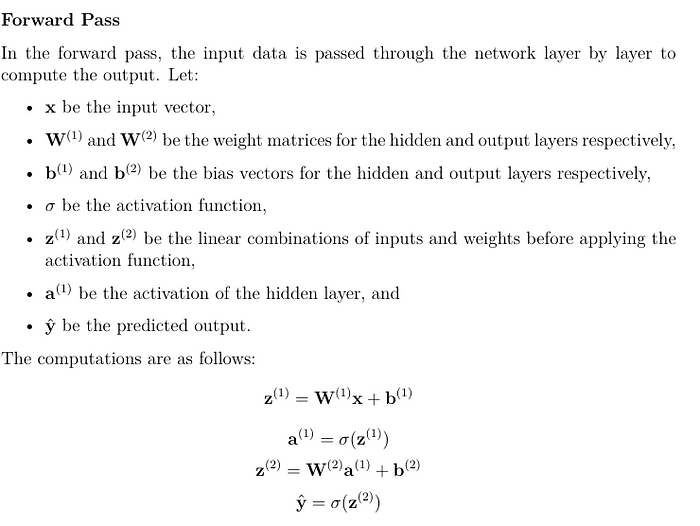

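Goodfellow et al. [GBC16] explain the learning process of neural networks as follows. Consider a simple feedforward neural network with an input layer, one hidden layer, and an output layer. Backpropagation computes the gradient of the loss function with respect to each weight. The process can be divided into two phases: the forward pass and the backward pass. In the forward pass, the input is propagated through the layers to produce a prediction and its loss. In the backward pass, the chain rule is applied from the output layer back towards the input to obtain the gradient for each weight.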
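The two phases can be sketched for a very small network with one input, one hidden layer, and one output. This is an illustrative sketch, not code from [GBC16]: the `tanh` activation, the squared-error loss, the starting weights, and the function names `forward`, `backward`, and `gd_step` are all assumptions.

```python
import math


def forward(x, params):
    """Forward pass: input -> hidden layer (tanh) -> linear output."""
    W1, b1, W2, b2 = params
    h = [math.tanh(W1[j] * x + b1[j]) for j in range(len(W1))]
    y_hat = sum(W2[j] * h[j] for j in range(len(W2))) + b2
    return h, y_hat


def loss(x, y, params):
    """Squared-error loss L = 0.5 * (y_hat - y)^2 for a single example."""
    _, y_hat = forward(x, params)
    return 0.5 * (y_hat - y) ** 2


def backward(x, y, params):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    W1, b1, W2, b2 = params
    h, y_hat = forward(x, params)
    d_out = y_hat - y  # dL/dy_hat
    grad_W2 = [d_out * h[j] for j in range(len(W2))]
    grad_b2 = d_out
    # Propagate through the hidden layer; tanh'(z) = 1 - tanh(z)^2.
    d_hidden = [d_out * W2[j] * (1 - h[j] ** 2) for j in range(len(W1))]
    grad_W1 = [d_hidden[j] * x for j in range(len(W1))]
    grad_b1 = d_hidden
    return grad_W1, grad_b1, grad_W2, grad_b2


def gd_step(x, y, params, learning_rate=0.1):
    """One gradient descent update using the backpropagated gradients."""
    W1, b1, W2, b2 = params
    gW1, gb1, gW2, gb2 = backward(x, y, params)
    return (
        [w - learning_rate * g for w, g in zip(W1, gW1)],
        [b - learning_rate * g for b, g in zip(b1, gb1)],
        [w - learning_rate * g for w, g in zip(W2, gW2)],
        b2 - learning_rate * gb2,
    )


# Hypothetical starting weights and a single training example.
params = ([0.5, -0.3], [0.1, 0.2], [0.7, -0.4], 0.05)
x, y = 1.5, 0.8
before = loss(x, y, params)
params_after = gd_step(x, y, params)
after = loss(x, y, params_after)
print(f"loss before: {before:.5f}, loss after one step: {after:.5f}")
```

A single update should lower the loss on this example. In practice a framework with automatic differentiation would compute these gradients, but writing them out by hand shows where each term of the chain rule comes from.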

GD combined with backpropagation is a powerful and efficient method for training neural networks. By iteratively updating the network’s weights to minimise the loss function, it enables the network to learn complex patterns in data.

Happy coding!